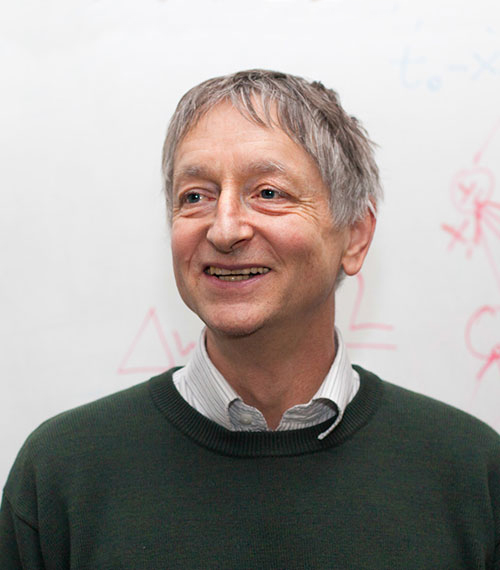

After completing a BA in Experimental Psychology at Cambridge University (1970) and a PhD in Artificial Intelligence at the University of Edinburgh (1978), Geoffrey Hinton (London, United Kingdom, 1947) held teaching positions at the University of California, San Diego and Carnegie Mellon University (United States) before joining the faculty of the University of Toronto (Canada).

From 1998 to 2001, he led the Gatsby Computational Neuroscience Unit at University College London, one of the world’s most highly regarded centers in this area. He subsequently returned to Toronto, where he is currently Professor in the Department of Computer Science. Between 2004 and 2013, he headed the program on Neural Computation and Adaptive Perception of the Canadian Institute for Advanced Research, one of the country’s most prestigious scientific organizations, and since 2013 has collaborated with Google as a Distinguished Researcher on the development of speech and image recognition systems, language processing programs and other deep learning applications.

Hinton is a Fellow of the British Royal Society, the Royal Society of Canada and the Association for the Advancement of Artificial Intelligence. He is also an Honorary Member of the American Academy of Arts and Sciences and the U.S. National Academy of Engineering. A past president of the Cognitive Science Society (1992-1993), he holds honorary doctorates from the universities of Edinburgh, Sussex and Sherbrooke.

His numerous distinctions include the 2016 NEC C&C Prize, the David E. Rumelhart Prize for outstanding contributions to the theoretical foundations of human cognition (2001) and the IJCAI Research Excellence Award (2005) of the International Joint Conferences on Artificial Intelligence Organization, one of the most prestigious awards in the field of artificial intelligence. He also holds the Gerhard Herzberg Canada Gold Medal of the Natural Sciences and Engineering Research Council (2010), considered Canada’s top award for science and engineering, and the James Clerk Maxwell Medal bestowed by the Institute of Electrical and Electronics Engineers (IEEE) and the Royal Society of Edinburgh (2016). Since receiving the BBVA Foundation Frontiers of Knowledge Award (2016), he has gone on to share the 2024 Nobel Prize in Physics with John Hopfield.

Information and Communication Technologies 9th edition

Micro interview

“Probably machines will get smarter than people in almost everything, but it’ll take a long time”

Geoffrey Hinton believes that sooner or later machines will learn to do everything the human brain does.

So what time scale are we talking about? “Over five years,” says Hinton, adding that beyond that point “it’s safer not to make predictions.” As the mind behind the remarkable boom that has recently swept the field and that augurs profound social and economic change, he knows better than most that information technologies advance in fits and starts. Hinton, winner of the Frontiers of Knowledge Award in Information and Communication Technologies, has drawn on the way humans learn in order to work towards the creation of the first machines able to learn for themselves.

The technology in question, known as deep learning, has already provided us with image and speech recognition tools, machine translators and driverless vehicles. Deep-learning applications have also been rolled out in biomedicine, security and other areas where useful information must be extracted from massive data sets. And this is just a taste of the potential of the new artificial intelligence (AI), with machines that, according to Hinton, “will make our lives a whole lot easier.”

As recently as a decade ago, few would have wagered on this resurgence of AI. Research into machine learning was progressing, certainly, but the pace was frustratingly slow. Specialists had been trying out different approaches. One consisted of programming the machine with vast amounts of knowledge, for instance by describing what a cat is so that it could recognize one on “sight.” But then how could it be programmed to tell a real cat from a toy cat, or from some other medium-sized feline? With this strategy, the body of prior knowledge the machine must digest just keeps growing.

An alternative is to train the machine with correct and incorrect examples, so that it gains “experience.” This is the approach taken by artificial neural networks, programs made up of interconnected units loosely modeled on biological neurons. The first neural networks, however, failed to live up to their promise, and most researchers came to see them as a dead end.

Hinton was not part of this majority. Fascinated since his teenage years by how the human brain works, he made the conscious choice to persevere with neural networks. After graduating from the University of Cambridge in 1970 with a BA in Experimental Psychology, he went on to the University of Edinburgh, where in 1978 he earned a PhD in Artificial Intelligence with a thesis on neural networks. For years, he recalls, his thesis advisor urged him to switch fields “on a weekly basis.” His reply never varied: “Give me another six months and I’ll prove to you that it works.”

Unable to raise the necessary research funding in his home country, he opted to emigrate, first to the United States and subsequently to the University of Toronto, in Canada. Still determined to find inspiration in the brain, he formed a group there in 2004 made up of experts in computing, electronics, neuroscience, physics and psychology. Deep learning was about to be born.

Against all expectations, in 2009 Geoffrey Hinton and his students developed a neural network for speech recognition that substantially outperformed the incumbent technology, itself the product of thirty years’ work. In 2012 a sophisticated network comprising 650,000 “neurons” and trained on 1.2 million images managed to reduce the object recognition error rate by almost half. To return to cats, this is the equivalent of the machine learning to identify the animal without anyone having described it beforehand. Since 2015, Hinton has combined his professorship at the University of Toronto with the post of Vice President and Engineering Fellow at Google.

What lies behind the success of the new AI? In no small measure, Hinton’s algorithms, whose workings he explains thus: “The human brain works by changing the strengths of the connections between its billions of neurons; to get a computer to learn, we can try to reproduce this process, identifying a rule that changes the connection strengths between artificial neurons.”
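Hinton does not spell out the rule in this quote. As a hedged illustration only, the sketch below uses one of the oldest such rules, Rosenblatt’s perceptron rule (a swapped-in example, not one of Hinton’s algorithms), to teach a single artificial neuron the logical AND function. The function names, the step activation, the learning rate of 1.0 and the AND data set are all assumptions made for the example.

```python
def step(total: float) -> int:
    """Threshold activation: the neuron fires (1) only if its net input is positive."""
    return 1 if total > 0 else 0


def output(weights, bias, inputs):
    """Weighted sum of the inputs through each connection, plus a bias, then threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(examples, lr=1.0, max_epochs=100):
    """Perceptron rule (illustrative): adjust connection strengths after each mistake."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, target in examples:
            error = target - output(weights, bias, inputs)  # +1, 0 or -1
            if error != 0:
                mistakes += 1
                # Strengthen or weaken each connection whose input was active
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors: training is done
            break
    return weights, bias


# Hypothetical training data: logical AND of two binary inputs
AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train_perceptron(AND_DATA)
for inputs, target in AND_DATA:
    print(inputs, "target:", target, "output:", output(weights, bias, inputs))
```

Each mistake nudges every active connection toward the right answer; on data such as AND, which a single neuron can separate with a straight line, the perceptron rule is guaranteed to stop making mistakes after a finite number of updates.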

Hinton’s algorithms mimic the biological process of learning and turn it into mathematics. This is where machine training comes into play. When the network correctly recognizes a training image, the connections that led to the right answer are reinforced, that is, made stronger. After lengthy training, the least useful connections have been weakened or effectively eliminated, and this is how the machine “learns.” The process rests on the mathematical method known as backpropagation, which Hinton helped to develop and popularize in the 1980s together with David Rumelhart and Ronald Williams. It calculates how much each connection contributed to the network’s errors and adjusts its strength accordingly.
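A minimal sketch of backpropagation, assuming a tiny network with two inputs, four hidden sigmoid units and one output, trained on the XOR problem (output 1 when exactly one input is 1) with a squared-error loss. The network size, learning rate, epoch count, random seed and function names are hypothetical choices for illustration, not Hinton’s own code.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def init_network(n_inputs, n_hidden, seed=1):
    """Small random connection strengths; the seed is an arbitrary choice."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(net, x):
    """Return hidden-unit activations and the network's output for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(net["w1"], net["b1"])]
    out = sigmoid(sum(w * h for w, h in zip(net["w2"], hidden)) + net["b2"])
    return hidden, out


def backprop_step(net, x, target, lr):
    """One gradient-descent update of every connection for a single example."""
    hidden, out = forward(net, x)
    # Error signal at the output: derivative of 0.5 * (out - target)**2 with
    # respect to the output unit's net input (sigmoid slope is out * (1 - out))
    delta_out = (out - target) * out * (1.0 - out)
    # Propagate the error backwards through each hidden unit's outgoing weight
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(net["w2"], hidden)]
    # Adjust connection strengths in the direction that reduces the error
    net["w2"] = [w - lr * delta_out * h for w, h in zip(net["w2"], hidden)]
    net["b2"] -= lr * delta_out
    for j, d in enumerate(delta_hidden):
        net["w1"][j] = [w - lr * d * xi for w, xi in zip(net["w1"][j], x)]
        net["b1"][j] -= lr * d


def total_loss(net, data):
    return sum(0.5 * (forward(net, x)[1] - t) ** 2 for x, t in data)


# XOR: output 1 when exactly one input is 1
XOR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
net = init_network(n_inputs=2, n_hidden=4)
initial_loss = total_loss(net, XOR_DATA)
for _ in range(10000):  # hypothetical epoch count and learning rate
    for x, t in XOR_DATA:
        backprop_step(net, x, t, lr=0.5)
final_loss = total_loss(net, XOR_DATA)

print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
for x, t in XOR_DATA:
    print(x, "target:", t, "output:", round(forward(net, x)[1], 3))
```

The key step is `delta_hidden`: the output error is sent backwards through each outgoing connection, so every hidden unit receives its share of the blame before its incoming connection strengths are adjusted. XOR is a classic case that a single artificial neuron cannot represent, which is why a hidden layer, and a way to train it, is needed.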

Deep learning is, in essence, “a new kind of artificial intelligence,” explains Hinton, where “you get the machine to learn from its own experience.” As a technique, it has also made the most of two other advances in computing: the leap in processing power and the avalanche of data now available in every domain. Indeed, many see deep learning and the rise of Big Data as a clear example of computational co-evolution.

“Years ago I put my faith in a potential approach,” says Hinton contentedly, “and I feel fortunate because it has eventually been shown to work.”